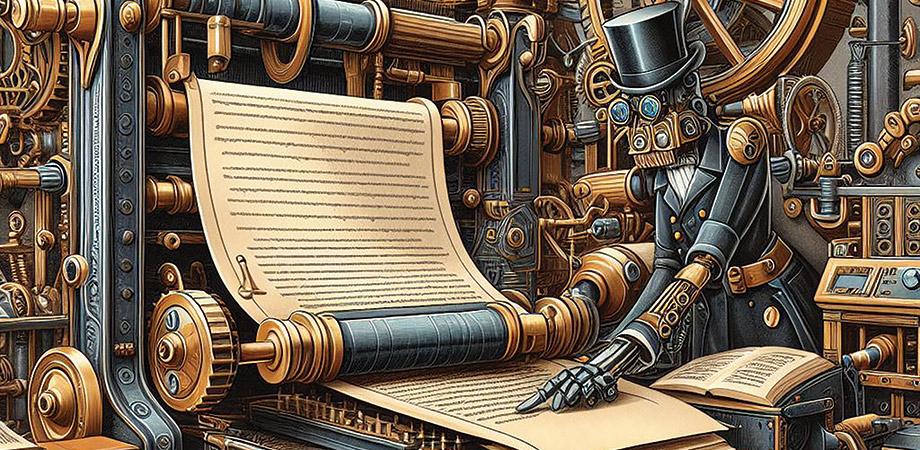

Hanging in the balance: Generative AI versus scholarly publishing

In 1454, Gutenberg’s prototype printing press began commercial operation, and the publishing industry was born. With it came a host of new concerns that are still relevant nearly six centuries later: literacy, plagiarism, censorship, the proliferation of false or unvetted information, and, most worrisome to the Catholic Church at the time, who should have access to information and what kinds of information they should be allowed to have.

Many of these worries have resurfaced at specific flashpoints in history, most recently with the November 2022 release of ChatGPT, which was built on GPT-3.5. Though chatbots existed before ChatGPT, it introduced realistic conversation and a surprising capacity for idea generation that earlier chatbots lacked. In the year since, large language models (LLMs) have developed rapidly (we’re already on GPT-4), and their role in society, and in scholarly publishing in particular, has been debated with equal parts anxiety and excitement. In this article, we weigh these issues in the balance.

Creativity/Hallucinations

“Use ChatGPT at your own peril. Just as I would not recommend collaborating with a colleague with pseudologia fantastica, I do not recommend ChatGPT as an aid to scientific writing,” writes Robin Emsley in a March 2023 editorial in the Nature journal Schizophrenia, citing the bot’s tendency to invent references. These fabrications, known as hallucinations, are delivered in a dispassionate, factual tone that invites trust, and it often takes an expert in the subject to detect them.

Chatbot developers have heard that concern, and some chatbots let users adjust the “creativity” of responses, from very creative down to “just the facts, please.” In its most conservative mode, Bing Chat, for example, does a decent job of citing real references, though every one of them still needs to be double-checked.

But the takeaway shouldn’t be to avoid the “creative mode” setting on LLMs altogether.

“Keep in mind that hallucinations are closely related to creativity. What is a new idea if not a hallucination about something that doesn’t yet exist?” writes Tyler Cowen, economist and co-author of “How to Learn and Teach Economics with Large Language Models, including GPT,” a paper with relevance far beyond economics.

In fact, generating new ideas is one of the greatest strengths LLMs offer researchers. Darren Roblyer, professor of biomedical engineering at Boston University and Editor-in-Chief of SPIE’s newest journal, Biophotonics Discovery, says that he uses LLMs to help identify new research questions. “It’s very good for background knowledge,” he says. “You want to find out what other people have done. Has anyone used diffuse optics or OCT to monitor kidney dialysis?” He says that LLMs can help reveal research gaps, which are opportunities for new exploration.

Ideation/Authorship

LLMs excel at generating text, including computer code: a September 2023 article in Nature reports that more than 30 percent of 1,600 researchers surveyed relied on LLMs to generate code. Authors Richard Van Noorden and Jeffrey M. Perkel also report that 28 percent of those surveyed use LLMs to help write manuscripts, and 32 percent said these tools helped them write manuscripts faster. These are astounding rates of adoption, given that few people could have defined “LLM” before November 2022.

The use of LLMs to write manuscripts is the source of much handwringing in scholarly publishing. While chatbots excel at ideation, they cannot be held accountable for their output, so they cannot be credited as authors of scientific studies. The Committee on Publication Ethics (COPE) takes a clear stance: “Authors are fully responsible for the content of their manuscript, even those parts produced by an AI tool, and are thus liable for any breach of publication ethics.”

Few scholarly publishers have banned generative AI tools outright. Most, including Springer Nature and SPIE, prohibit listing a chatbot as an author but otherwise allow its use, provided that the use is disclosed in the methods or acknowledgements section.

Transparency like this will be key to the adoption of AI in publishing, says Jessica Miles, Vice President for Strategy and Investments at Holtzbrinck Publishing Group, who debated in favor of AI in scholarly publishing at the closing plenary of the 2023 annual meeting of the Society for Scholarly Publishing. She pointed to the €2 billion the STM publishing industry has invested since 2000 to digitize the scholarly record, make it more findable, and safeguard its integrity by developing tools to identify plagiarism and other types of fraud. She said, “These examples of how we’ve sustained trust and transparency by supporting industry-wide standards, and developed technology and infrastructure in response to transformation, provide a blueprint for how academic publishing will continue to evolve and endure in response to AI.”

Peer review/Confidentiality

Every journal editor will tell you that the greatest pain point in scholarly publishing is finding qualified and willing reviewers. Due to the surge in manuscript submissions, increasing research specialization, limited volunteer time, and a cultural shift towards work-life balance, there just aren’t enough reviewers. Can AI help?

The US National Institutes of Health (NIH) says no. In a June 2023 blog post, NIH emphasizes that using AI tools to analyze or critique NIH grant applications is a breach of confidentiality, because “no guarantee exists explaining where AI tools send, save, view, or use grant application, contract proposal, or critique data at any time.” The examples given describe uploading a proposal or manuscript to an LLM and asking it to write a first draft of the review, a two-minute task that could save human reviewers hours.

But the scenario described by the NIH lacks nuance about the way AI tools can, and possibly should, be used. For example, LLMs can help reviewers conduct literature reviews without disclosing manuscript-specific information. They can also help identify overlooked seminal work in a research area. Roblyer says, “That can be really tedious for reviewers now, to find the right papers, who else has published in this area, does the current paper point to the relevant publications or not.”

Even the confidentiality issue can be overcome. Bennett Landman, chair of the Department of Electrical and Computer Engineering at Vanderbilt University and Editor-in-Chief of SPIE’s Journal of Medical Imaging, notes that institutions like Vanderbilt are increasingly licensing private LLM instances that keep users’ data confidential. “That’s an addressable problem,” he says.

On peer review, Landman believes that rules strictly banning LLMs are futile because people will find ways around them. He continues, “All of this regulation and ‘thou-shalt-not’ generates cheating potential. Why don’t we take the problem head on? We should have an LLM input for reviews but shouldn’t mistake it for a human input. Human reviewers should be able to comment whether the LLM is correct.” He notes that having ChatGPT perform a first review would avoid duplicated effort across reviewers, and that editors might get deeper insight from reviewers who no longer need to focus on language, structure, and clarity.

Novelty/Bias

When used correctly, LLMs have the potential to help manage the enormous inflow of scholarly research that must be screened and vetted before it can be published. Journal editors, in particular, have to make many important decisions with limited time. They must assess each paper’s relevance to the journal and its novelty. Chatbots can present this type of abstract compare-and-contrast information much more clearly than traditional internet search tools, with the usual caveat that their conclusions must be validated.

“You can see it as a useful tool that helps an editor be better,” says Roblyer, “but will it incentivize the same work that’s been highly cited in the past? Will it cause the whole field to be more conservative because you’re pushing things in the direction of a model trained on old data?”

That’s one concern, as is the potential for reinforced bias: LLMs rely on statistical relationships between written words. In fact, according to Landman, “ChatGPT by definition embodies the bias of our society.” When LLMs generate new text, they can propagate word relationships that are no longer accurate, or even downright harmful.

An October 2023 study by Omiye et al. in npj Digital Medicine reveals that LLMs, trained on outdated and sometimes discredited medical information, can perpetuate racism. For instance, the chatbots tested made false race-based medical assertions when queried about lung capacity and kidney function. This raises concerns about using LLMs in healthcare to assist with diagnoses or treatments until these biases can be resolved.

Fraud/Accessibility

Fraud, particularly from paper mills producing fake research papers, is an escalating issue in scholarly publishing that has been reported extensively in Photonics Focus and elsewhere. Previously, these fraudulent papers were often easy to identify by their poor grammar, structure, and substance. However, the advent of LLMs, which excel at generating grammatically perfect text in a specific tone, has made these fake papers harder to detect.

Or, as Landman puts it, “ChatGPT is very good at talking to us like a trusted friend, but it has no social construct that makes it know it’s our trusted friend.” LLMs are a tool without a moral compass and rely on their operators to provide one—just like every other tool.

According to Miles, “It is people, not technology like AI, that fuel these threats. People can, working collaboratively, develop and implement strategies for overcoming these crises.”

Let’s hope she is right, because the same language proficiency that makes LLMs useful for malfeasance also makes them very helpful tools for people whose first language is not English. English-language editing is the primary use reported by 55 percent of the people surveyed in Van Noorden and Perkel’s Nature article.

LLMs make scholarly publishing more accessible to people who have historically struggled to publish and advance in their careers because of a language barrier. These tools allow researchers to focus their efforts on the science rather than on producing a grammatically perfect English-language manuscript.

Landman gives a useful analogy: “Before widespread use of calculators, my parents spent a lot of time learning slide rules and multiplication tricks that a cheap calculator can do now. We still learn our multiplication tables, but that education now peters out in middle school. It transforms into geometric reasoning and tables, and word problems, and structuring the problem so you can do it on a calculator.

“But we’re still writing paragraphs the same way as a 1940s textbook,” Landman continues. “If we have writing tools, much like a calculator for writing, that remind you what 7 × 13 is, and you don’t need to think about it, then could we teach kids to reason at a higher level earlier? Could we get people to structure the argument and the meaning of the language at a deeper level than the language itself?”

And here lies one of the greatest uses of generative AI: it can potentially equalize opportunities for researchers in non-English-speaking countries. In the future, scientists might skip the effort of learning English altogether. They could conduct their research and write papers in their own language, submit those papers for publication in the same language, and rely on integrated LLMs for on-demand translation for reviewers, editors, and readers in each of their preferred languages.

The issues with generative AI chatbots are known: lack of accountability, the potential to propagate bias, and not-always-reliable accuracy. But they can also help us humans think in different and creative ways and streamline tedious tasks, which may ultimately be a boon for burdened researchers.

“Think of GPTs not as a database but as a large collection of extremely smart economists, historians, scientists, and many others whom you can ask questions,” says Cowen. “If you ask them what was Germany’s GDP per capita in 1975, do you expect a perfectly correct answer? Well, maybe not. But asking them this question isn’t the best use of their time or yours. A little bit of knowledge about how to use GPT models more powerfully can go a long way.”

Six centuries after the invention of the printing press, we can say confidently that history ruled in its favor. How will history rule on the introduction of generative AI? As the balance tips back and forth, it’s not yet clear whether AI will be a boon to scholarly publishing or a thorn in its side. What is certain is that this conversation has just begun.

Gwen Weerts is Editor-in-Chief of Photonics Focus.