Toward standard tests for automotive lidar systems

To help engineers understand performance characteristics of various automotive lidar systems, SPIE recently sponsored the first large-scale, open, and noncompetitive lidar benchmarking study using calibrated targets in the field. A multidisciplinary team evaluated 10 lidar systems in this pilot project.

Held 2–3 April near Orlando, Florida, prior to the SPIE Defense + Commercial Sensing conference, it was also the first such automotive lidar system test conducted with the intent of making both the test plan and aggregated results public.

“How does anyone compare performance of lidar systems? What are the standard metrics? That is what this project is about,” says team leader Paul McManamon, CEO of Exciting Technology, an SPIE past president, and author of LiDAR Technologies and Systems and Field Guide to Lidar, both published by SPIE Press. “This field test was not designed to be a competition, but rather an experiment to quantify lidar performance and variation. We are creating an independent framework to evaluate lidar systems.”

McManamon and Jeremy Bos, a lidar test and analysis expert and assistant professor of electrical and computer engineering at Michigan Tech, presented the aggregated results on 6 April at the SPIE event. A description of the automotive lidar benchmarking study, with experimental analysis, will be published in a forthcoming special section of Optical Engineering. The test demonstrated and quantified how performance varies from device to device.

Aerial view of targets distributed on the Orlando test range. Photo credit: Cullen Bradley

Performance results for each specific automotive lidar system, including analysis of range, resolution, and accuracy, are being released only to that system’s developer. Each participating company will see how its system compared with a RIEGL laser-measurement system and where it fell within the combined dataset.

“No doubt autonomous vehicle manufacturers have devised their own lidar-system tests and evaluated engineering samples,” says Bos. “But for the automotive lidar system industry to continue its success, comparative benchmarking standards are vital.”

Bos and his team from Michigan Tech are among the first and most prolific testers of automotive lidar systems in adverse field conditions, such as the snow and cold of Michigan’s Upper Peninsula. Their experience, combined with input from more than a dozen experts and volunteers, contributed to the design and setup of the SPIE-sponsored lidar test range in NeoCity, Florida.

Partners on the study included Labsphere, which manufactured and donated the calibrated targets necessary for performance measurement. RIEGL donated access to the high-end lidar system used to create the reference dataset for the site and targets, and Florida’s Osceola County provided the test site area. Other onsite partners included BRIDG, imec Florida, and SkyWater Technology.

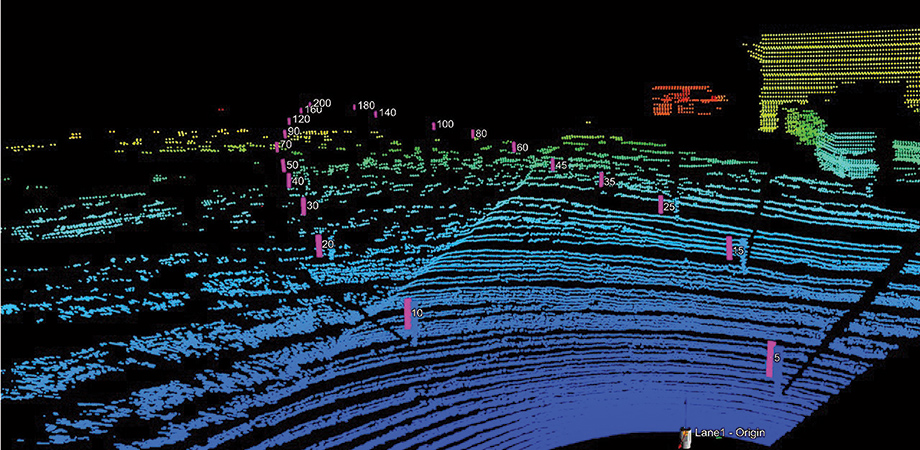

RIEGL’s survey-grade lidar system provided an accurate ground-truth dataset as a baseline, and the 3D point cloud from each automotive lidar system tested was compared against it. Variations from ground truth and overall test results are currently being analyzed by Bos, Charles Kershner of the US National Geospatial-Intelligence Agency (NGA), and engineers at Exciting Technology.
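The article does not say how the team computes variation from ground truth. As a rough illustration only, one common way to compare a test point cloud against a reference cloud is to find, for each test point, the distance to its nearest reference point and then summarize those distances. The Python sketch below assumes both clouds are already registered in the same coordinate frame; the function names `nearest_distance` and `deviation_stats` and the mean/RMS/max summary are hypothetical choices, not the study's method, and the brute-force search is only practical for small clouds.

```python
import math
from typing import Sequence

# A point is (x, y, z) in meters; both clouds are ASSUMED to share one registered frame.
Point = tuple[float, float, float]


def nearest_distance(p: Point, reference: Sequence[Point]) -> float:
    """Euclidean distance from p to the closest point in the reference cloud (brute force)."""
    return min(math.dist(p, q) for q in reference)


def deviation_stats(test_cloud: Sequence[Point], reference: Sequence[Point]) -> dict[str, float]:
    """Summarize how far each test point lies from the reference (ground-truth) cloud.

    Hypothetical summary metrics: mean, root-mean-square, and maximum
    nearest-neighbor distance.
    """
    if not test_cloud or not reference:
        raise ValueError("both point clouds must be non-empty")
    dists = [nearest_distance(p, reference) for p in test_cloud]
    return {
        "mean": sum(dists) / len(dists),
        "rms": math.sqrt(sum(d * d for d in dists) / len(dists)),
        "max": max(dists),
    }


if __name__ == "__main__":
    # Toy data: three reference points on a vertical post 10 m away, two noisy test points.
    reference = [(10.0, 0.0, 0.0), (10.0, 0.0, 0.4), (10.0, 0.0, 0.8)]
    test = [(10.05, 0.0, 0.0), (10.0, 0.0, 0.43)]
    print(deviation_stats(test, reference))
```

With real scans of many thousands of points, a spatial index such as a k-d tree would replace the brute-force `min` search, but the comparison logic stays the same.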

The concept for such a benchmark automotive lidar test has been percolating for three years but was postponed due to COVID-19 restrictions. McManamon proposed the idea to SPIE leadership as a service to the optical engineering community. He was able to rally others, including Bos, Joseph Shaw of Montana State University, researchers at the US National Institute of Standards and Technology, the US Navy and Air Force, NGA, FEV Group, SAE International, Lockheed Martin, University of South Florida, Labsphere, RIEGL, and representatives from autonomous vehicle companies.

The test included 20 calibrated targets provided by Labsphere. These 10-percent-reflectivity targets, 80 cm tall and about 15 cm wide, were sized to represent a small child.
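A back-of-the-envelope estimate of how many lidar beams land on a target this size helps show why it is demanding at long range. The sketch below uses the 15 cm by 80 cm target dimensions from the article, but the 0.1° horizontal and vertical angular resolution is a hypothetical value, not a specification of any tested system. It is a purely geometric estimate that ignores beam divergence, the 10 percent reflectivity, and detection thresholds.

```python
import math

# Target dimensions from the article; the angular resolution is a HYPOTHETICAL example value.
TARGET_WIDTH_M = 0.15
TARGET_HEIGHT_M = 0.80
ASSUMED_RES_DEG = 0.1  # hypothetical, not a spec of any tested system


def angular_extent_deg(size_m: float, range_m: float) -> float:
    """Full angle, in degrees, subtended by an object of size_m seen face-on at range_m."""
    return math.degrees(2.0 * math.atan(size_m / (2.0 * range_m)))


def expected_returns(width_m: float, height_m: float, range_m: float,
                     h_res_deg: float, v_res_deg: float) -> float:
    """Average number of beams expected to land on a flat rectangular target.

    Geometric estimate: (horizontal extent / horizontal beam spacing) times
    (vertical extent / vertical beam spacing). Ignores beam divergence,
    reflectivity, and detection thresholds.
    """
    columns = angular_extent_deg(width_m, range_m) / h_res_deg
    rows = angular_extent_deg(height_m, range_m) / v_res_deg
    return columns * rows


if __name__ == "__main__":
    for r in (50.0, 100.0, 150.0, 200.0):
        n = expected_returns(TARGET_WIDTH_M, TARGET_HEIGHT_M, r,
                             ASSUMED_RES_DEG, ASSUMED_RES_DEG)
        print(f"{r:5.0f} m: ~{n:.1f} returns on target")
```

Under these assumptions, roughly 16 beams would fall on the target at 50 m but only about one at 200 m, because the count falls off approximately as the inverse square of range.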

The 10 lidar systems were tested one at a time, each mounted on a tripod at a fixed height, with the Labsphere targets adjusted so they were not obscured by natural variations in the terrain. Each system exported its 3D point cloud in rosbag format to a laptop for subsequent analysis.

After gathering scene data from each lidar system, the team added complexity to the range with a suite of “confusers,” such as traffic cones, barricades, and road signs, placed adjacent to each target. The idea was to test each lidar system’s automatic gain control as its detector measured light reflected from bright objects, then dark ones, and back again across the range of targets. Such tests of dynamic range are critical in the real world, where people in dark clothing or tire fragments may appear next to highly reflective objects.

None of the tested lidar systems advertised a range beyond 150 m, yet two of them detected targets out to 200 m or more. Next April, before SPIE Defense + Commercial Sensing 2023, the team will be ready to evaluate more systems using a published test plan.

McManamon says he is more enthusiastic than ever about automotive lidar testing. “This is a multiyear process, and we are very interested in receiving feedback from the lidar community as we refine the test plan and increase the number of lidar companies involved. It’s a win-win. They get independent benchmarking data, and we make progress toward establishing performance measurement standards.”

Want to get involved? Send an email to lidar-test@spie.org and get ready for April 2023.

Peter Hallett is Director of Marketing and Industry Relations for SPIE.
